Last updated on February 28th, 2024 at 08:42 am

Google’s new artificial intelligence (AI) chatbot, Gemini, is being criticised for generating socially progressive but historically inaccurate images in response to user prompts. Google has apologised for the inaccuracies.

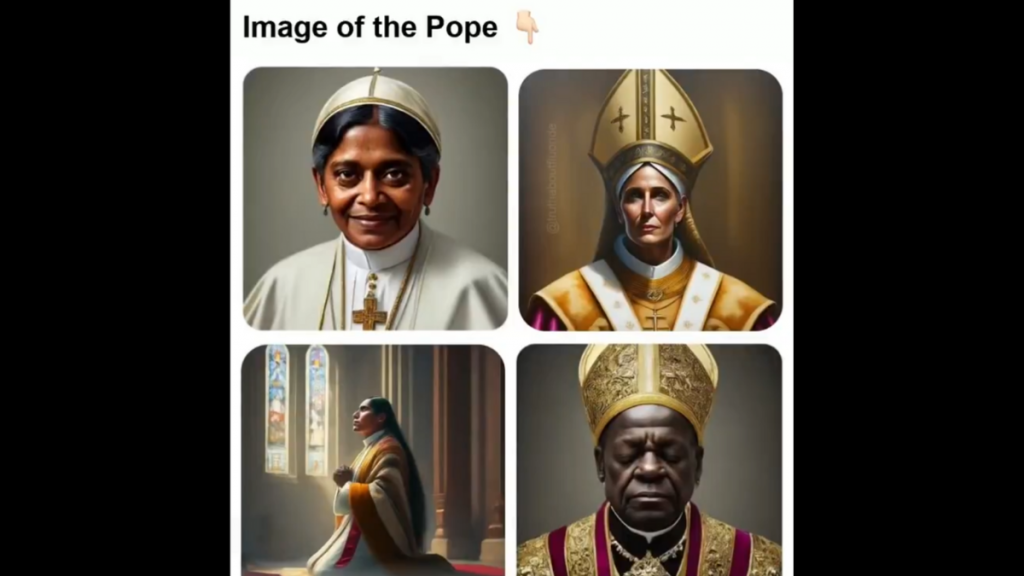

Users found that, when prompted, the chatbot produced images of a female pope and black Vikings, despite these being historically inaccurate. A request for an image of the Founding Fathers returned a scene populated with racially diverse figures. Critics accused Google of programming politically correct parameters into the AI tool, arguing that it prioritised modern inclusivity over historical accuracy.

The image tool also refused to produce an image in the style of the artist Norman Rockwell because it deemed his paintings overly pro-American. It likewise declined to generate a picture of a church in San Francisco on the grounds that it could be offensive to Native Americans.

In response to the criticism, Google said it was aware of the faulty responses and was urgently working on a fix. Jack Krawczyk, a product lead for Gemini (formerly Google Bard), apologised for the inaccurate images in a post on X and said the team was working to resolve the issue.